Which AI Deep Research Is the Best?

We're in early 2026 -- which Deep Research mode beats the rest?

Hey, y’all – Sherveen here.

OpenAI released an update to their Deep Research feature last week (now fueled by GPT-5.2). So, I thought it’d be a good time to begin a new AI showdown series: which AI deep research product is the best right now?

I ran the same set of queries against mixed sets of 9 products from:

Anthropic (Opus 4.6 with Research)

Google (Deep Research w/ Gemini 3 Pro)

OpenAI (ChatGPT Deep Research w/ GPT-5.2)

Wild cards from Perplexity (Deep Research), Manus (1.6 Max), Moonshot AI (Kimi 2.5 DR/Agent), Z[dot]ai (GLM-5 Agent), and MiniMax (M2.5 Agent).

As we go, I’ll provide links to the full chat response for each result.

Reminder: deep research (DR) is an agentic mode available in pretty much every state-of-the-art AI chat app. It focuses on intensive exploration and discovery about a topic through hyper-extensive web searches, fetching of data + primary sources with citations, and planning and reasoning to empower relevant results.

This could be for a social or scientific studies question, deep product discovery and comparison, or business and market research.

Where a model-maker doesn’t have a DR product available, I’ll use their agent modes. If a result was plainly not worth talking about, I’ll exclude that model from discussion.

And for ChatGPT, we’ll include Deep Research and 5.2 Pro. While 5.2 Pro isn’t a dedicated research product, it’s a highly agentic, long-inference chat model available on OpenAI’s $200/month tier. It does intensive research while still being more interpretive and conversational, so we’ll see how it does against DR pipelines!

One caveat: I’m not expert on most of the domains down below. I am using a mixture of context clues and source reading to validate that the responses aren’t blatantly wrong. Wrongness in deep research pipelines is a nuanced topic for a different day, and generally solvable within the same product harness, so… as unintuitive as it might sound, it’s somewhat a side topic when it comes to today’s comparisons.

Let’s dive in.

Test 1: Asking a broad question

This is the kind of question we often ask LLMs: we want a conclusion, but we want that conclusion to be well-evidenced, too.

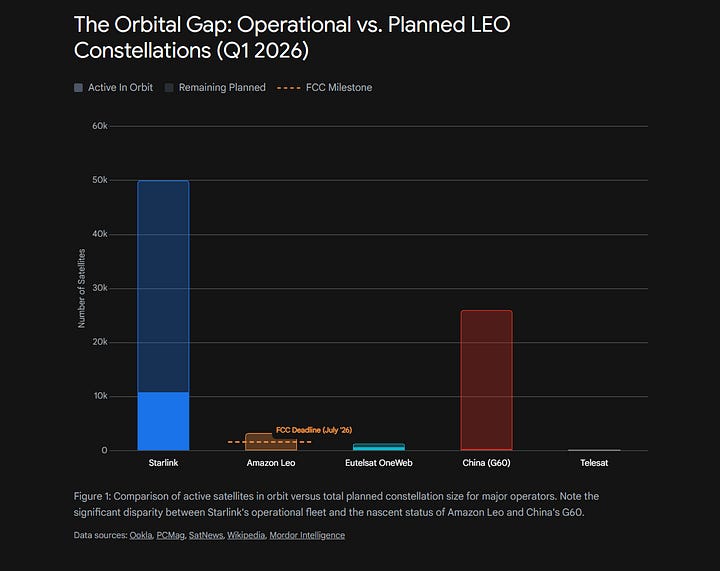

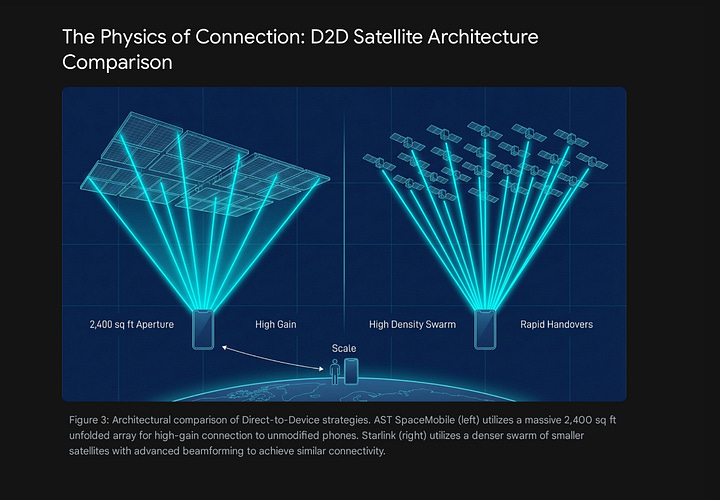

I’ve long been curious about what seems like Starlink’s very long lead in the satellite telecom and internet market. It seems like a very dubious thing to have one company hold so much necessary capacity for the world.

Can you do a deep exploration of the market -- emerging competitors, nearest in-market alternatives, differences in capability and feature sets, and the nuances throughout? Would love an analysis of this market and what it will look like over the next few years.

Here is the chain-of-thought I had analyzing the results:

Perplexity, Kimi, and MiniMax all suffered the same issue: they cite a lot of stats and give you a lot of facts, but they’re meandering and tend to over-rely on secondary sources (like third party blog posts).

GLM-5 is the first strong response. We get hits of everything important: from details on Starlink’s products to a good overview of its competitors, and the geopolitical + strategic dynamics playing out. But – it reads like a textbook.

Gemini’s DR is very ‘consultant’ coded. Not a bad thing! It’s a structured document with a lot of framing and definitions, plus generated graphics and charts that are hit-and-miss (below).

Here, you’ll feel that Gemini’s deep research mode always struggles between interpreting the user prompt versus following the system’s instructions. In the response, we see it say: “The user’s query regarding ‘dubious capacity’ touches on a future risk: Oversupply.” In practice, this means it’ll often refrain from its own synthesis or conclusion-drawing.

Claude’s result is by far the most readable, which will be a recurring theme. It has a great writing pace and tone, and reads the most like someone’s Substack.

It’s also the most opinionated. Not in a big way, but it’s more likely than the others to highlight conclusions that it deems important to notice.

Example (below) — compare how we learn about legacy satellite player Eutelsat OneWeb in Gemini (left) versus Claude (right).

ChatGPT’s DR is a bit of a mix of Gemini and Claude. It’s far more thorough than Claude, almost as willing to draw conclusions, but less readable. It has Gemini-like qualities in its structure: like a consultant wrote it, framing the problem at the top, diving into a long comparison of markets and features, and closing with opinionated forecasts — with several generated tables along the way.

ChatGPT’s 5.2 Pro does diligent research, just like everyone else. The result, however, reads far more like a conversational LLM.

In fact, the response begins with immediate synthesis: defining four overlapping categories in the market race that it then uses to frame the rest of the research. This response gets very specific as to where Starlink is today, why it’s ahead, and on which vectors it’s most vulnerable.

🏆 Winner: GPT-5.2 Pro. While Claude’s opinionated readability is easy on the eyes and ChatGPT’s DR provides an analyst’s flair, 5.2 Pro does still-thorough research while really providing framing and context to the query.

Test 2: Asking about modern science

This type of question gets at two nuances: (1) how good are these researchers at retrieving hyper-recent results and (2) how good are they at understanding what qualifies as scientifically ‘worthy’? Our prompt:

I’d be curious to learn all about the recent scientific progress being made re: male pattern baldness. What are the recent promising findings, studies, experiments, tests, etc. worth knowing about?

Here is the chain-of-thought I had analyzing the results:

Perplexity is fine. It’s not wrong (from what I can tell), but I can’t click on inline sources, there isn’t a lot of progressive claim building, and it feels like a bulleted list I have to vet myself.

Claude feels like a fast-talking expert. It’s well-cited and does good work framing the progress of the science. We learn about promising drugs, RNA and gene techniques in early development, and cell therapy techniques gaining traction in Asia. But it’s definitely a dense read meant for someone who wants max science.

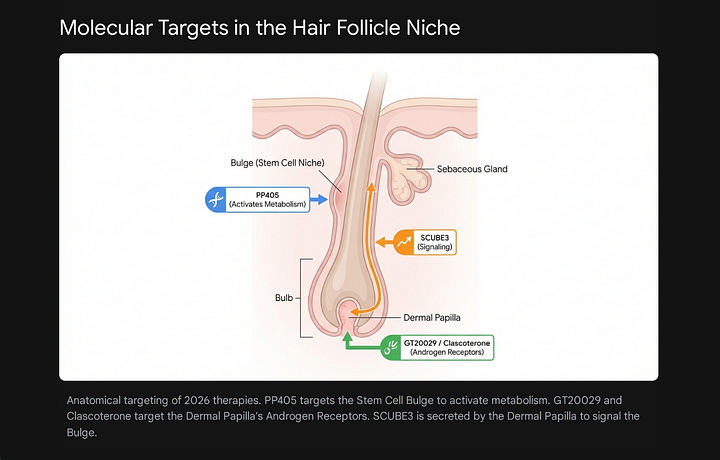

Gemini’s answer feels like that of an educator-scientist. We start with a great diagram of a hair follicle. As we learn about new medications and interventions, Gemini begins each section with an explanation of the base science (below). Gemini is the only model to cite TissUse, a unique “smart organ-on-chip” technology, but it’s also the only model to miss on VDPHL01, a seemingly important evolution of oral minoxidil.

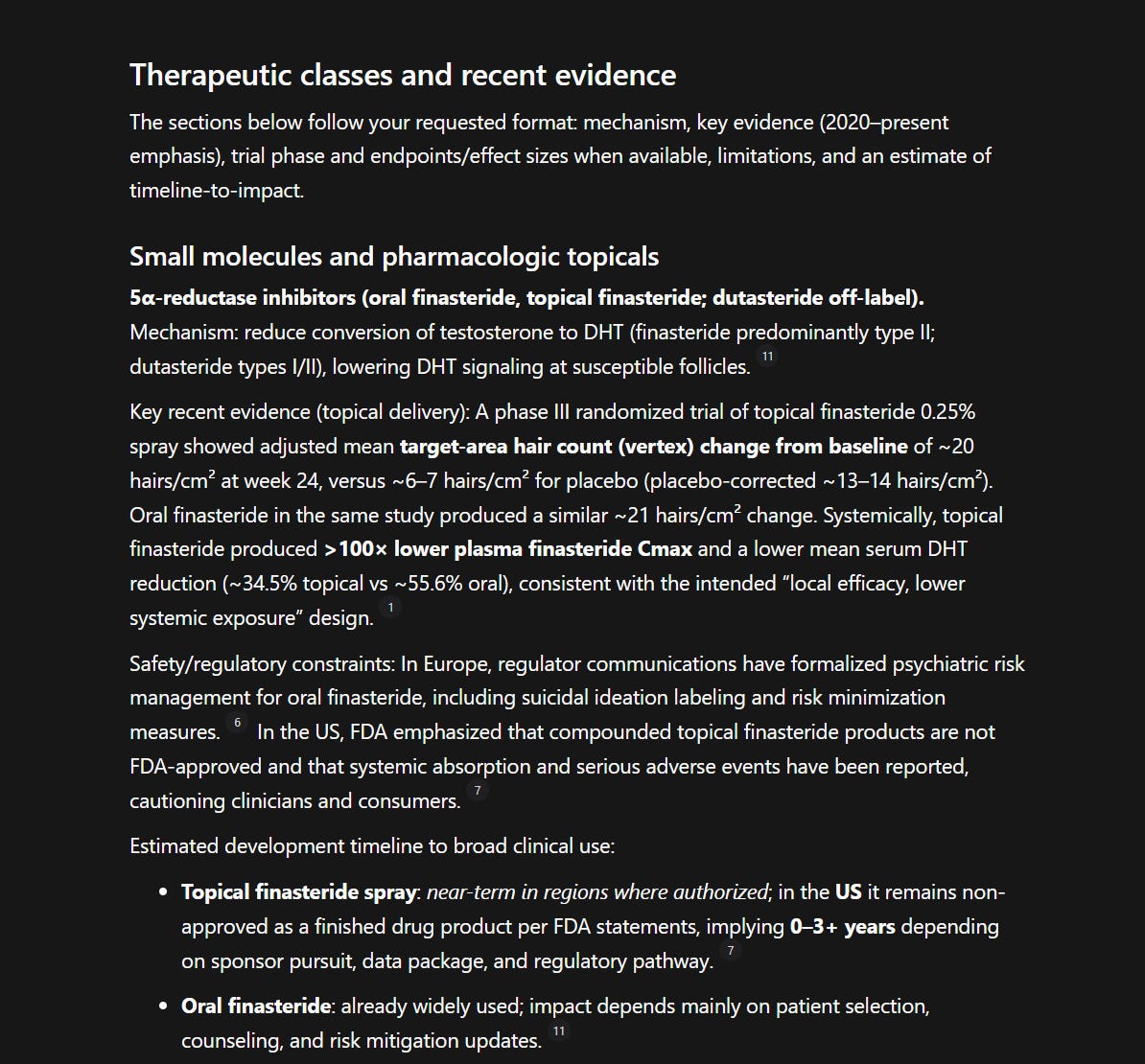

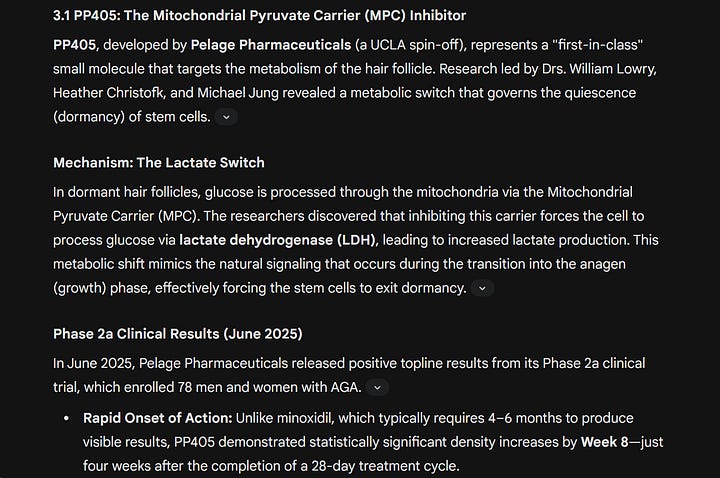

ChatGPT DR is the scientist’s scientist. OpenAI’s products, as always, are diligent at web search, using multiple sources to validate and verify a conclusion. The language has the most technical density, which means this winds up being the least layperson-readable of the 3 results.

However, there’s a section where the response suddenly anchors to the user prompt more tightly, and we get practical takeaways as a result. “The sections below follow your requested format: mechanism, key evidence (2020–present emphasis), trial phase and endpoints/effect sizes when available, limitations, and an estimate of timeline-to-impact.” (below)

Perhaps due to adherence to my prompt, it spends the least amount of time detailing therapies and interventions that are still 5+ years away. It names them, but it doesn’t spend as much time on them.

ChatGPT’s 5.2 Pro response is thorough and highly readable, hitting on every relevant and near/mid-term trial and drug, and ending with the upcoming data and studies to watch for.

🏆 Winner: GPT-5.2 Pro. Once more, it did research as thoroughly as the dedicated DR products, but had the sort of framing and readability that maximizes learning. It’s DR with bedside manners.

Test 3: Asking about influencer science

I just pressed play on the episode, but I’m already intrigued by an initial claim in the first 30second teaser of this podcast -- Dr. Michael Breus was just on the Diary of a CEO, and he says there are four sleep chronotypes that dictate not just when it’s best for you to sleep, but also when it might be best for you to have coffee or to learn complicated concepts. He says there are three that are widely recognized, and he’s kind of unraveling a fourth. What’s the real science here?

All tested models did an excellent job in retrieving studies and primary sources.

Claude was quick and readable: Breus’s general framing is generally not peer-reviewed, and his novel addition to the existing and validated sleep chronotype framework is probably not a real thing.

Gemini’s deep research is a bit friendlier to Dr. Breus, suggesting that his fourth chronotype may be a thing related to a validated “hyperarousal model of insomnia,” but like Claude, it’s not sure if this should be a chronotype or if it’s a disorder.

Gemini is also friendlier to its finding that Dr. Breus recommends a 90 minute delay for morning caffeine. Claude also finds this in its research and points out that Dr. Breus is relying on a mechanistic effect that doesn’t have empirical validation (aka studies saying it actually works).

I don’t like how unopinionated Gemini is being here – even though its response is far more thorough than Claude’s in terms of education, analogies, examples, and practical descriptions. At the core of my question… is the science right, or isn’t it!?

I could just ask the LLM’s in their normal mode if I’m looking for more interpretation, but remember that the LLMs in their normal mode are much worse at doing exhaustive research, and so I’d lose the benefit of their fact-finding.

ChatGPT’s DR is the platonic ideal of deep research here. It doesn’t treat the reader with kid gloves. Instead, it does sequential building of information to equip the reader with deep knowledge by the end.

Beyond looking at the science, it looks up multiple press hits from Dr. Breus over the past decade, including interviews from 2016 where he first proposed his fourth chronotype. And that leads to a useful conclusion: “Given the marketing context (quiz plus product ecosystem) and narrative style, the most defensible characterization is that the Breus framework is primarily a popular synthesis + coaching heuristic, potentially informed by clinical experience, rather than a published, independently replicable empirical typology.”

ChatGPT’s 5.2 Pro is a great “walkaway skim” version of the other three responses – but the other three are meaningfully more in-depth this time.

🏆 Winner: ChatGPT Deep Research. We have the right mix of the right research, thoroughly, with the right takeaways. Again, we want our DR pipelines to be thorough — but it’s still a combination of receipts, teaching, and willingness to “land the plane” when it comes to the original prompt.

Test 4: Asking about the numbers

Certain people in tech lie about college admissions numbers to feed political narratives — it’s pervasive and malicious. So, I asked the different research modes to help me find the data to combat those lies. Prompt (excerpt):

I need a comprehensive, well-cited breakdown of international versus domestic enrollment at top US universities, split by year and by level. We may need to search institutional archives, fact books, or registrar reports. Schools: Harvard, Stanford, MIT, Yale, Columbia, University of Chicago. Let’s grab: current international student % at each school, sub split by 1974-1975, 1994-1995, and 2023-2024 (or nearest years where we can find reliable data), sub split in those zones by undergrad versus grad.

This is a challenging query because it’s not just about deep digging and finding of primary sources. Not all of the data will be readily available or printed on a website. Instead, the models will have to extract specific numbers from specific years at different colleges.

To do this successfully, the agents will have to plan a mode of research that hits different cohorts of data, dig through archival documents and PDFs, find alternate sources after running into roadblocks, and adjust along the way.

ChatGPT DR struggled here. Although it’s a thorough web crawler, it isn’t dynamic enough (perhaps not even enabled) to download relevant files, extract information using code or vision, and use complex interfaces.

GPT-5.2 Pro was a little better, but surprisingly, it wasn’t as agentic as what I believe was o1-preview when I asked this same question last year.

Claude made a far more robust attempt, especially after a second encouraging query. By the end, we got a useful Excel sheet with confidence intervals per stat based on the quality of the origin data. I think we’re seeing Anthropic’s focus on file-handling come into play here, enabling better ingestion of docs during research and the production of new artifacts as part of the response.

Perplexity looks interesting on the surface, until you dig in and notice that it’s mostly secondary sources or estimations of data.

Both Gemini and Manus found a lot of adjacent, disconnected data that ultimately didn’t round up well into a cohesive view of the situation.

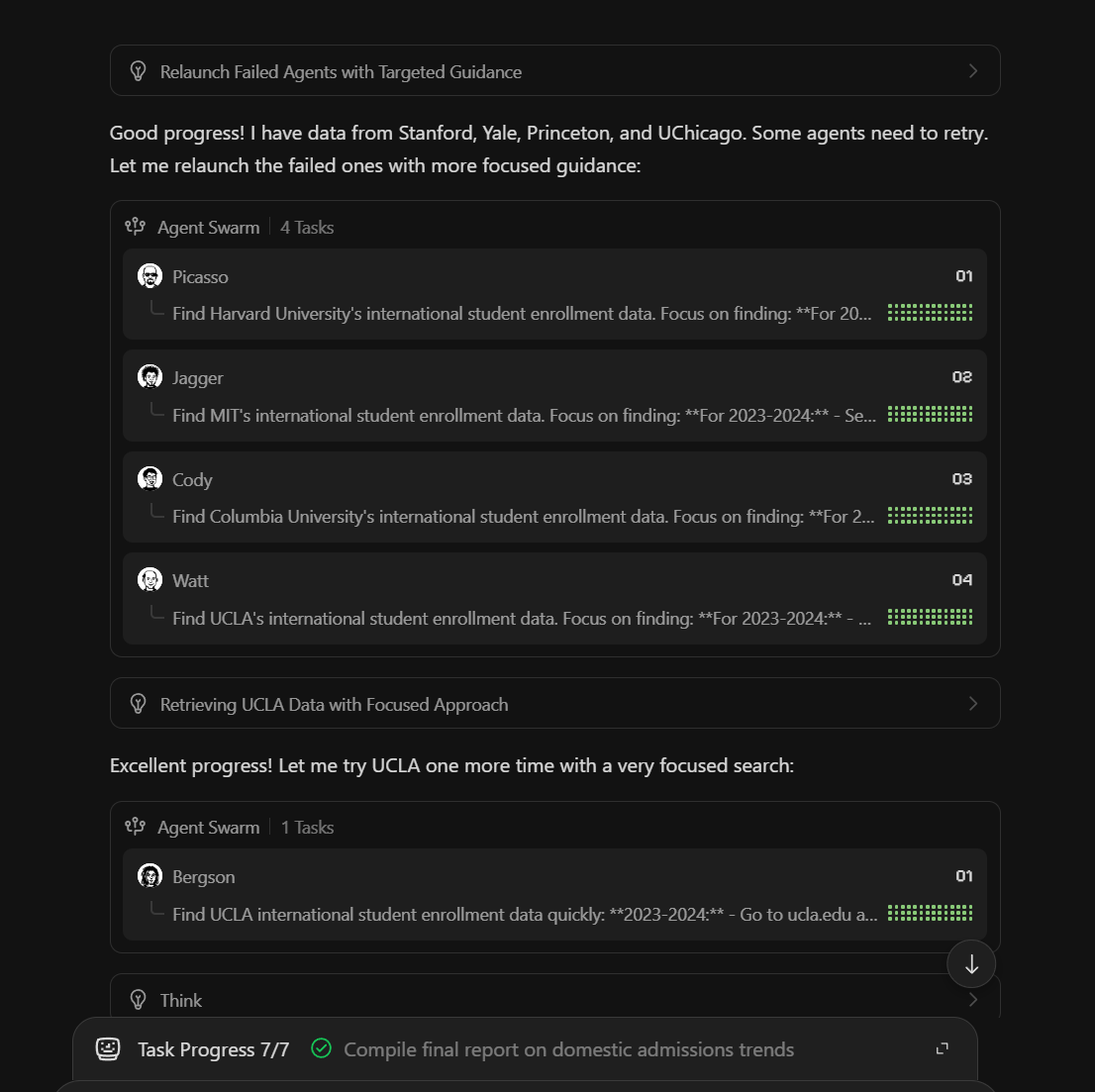

The dark horse here: Kimi 2.5 in Agent Swarm mode. This allowed the main Kimi agent to spin up several parallel subagents to perform per-school research (below). As rounds of subagents found more info or hit new roadblocks, it would spin up new subagents to retry places where the research failed. Ultimately, we received the most comprehensive set of files with the most data, and where it couldn’t find precise data, it found its nearest neighbor and noted it.

🏆 Winner: Kimi 2.5 Agent Swarm. Multi-agent systems are probably at play in the background with several of the AI products we use today, but Kimi’s product most explicitly uses that architecture today. By running many agents in parallel, each is less likely to get exhausted or move on to do another task, and we can see the value of that atomic focus within these results.

Test 5: Asking for niche product research

Repo Prompt is a tool for gathering context and code into a neat “package” to transfer to outside AI agents for advice (basically, ‘take these large files and reorganize them so I can paste them somewhere else’). Which of these DR products will be best at finding alternatives – especially given that I didn’t define Repo Prompt in my query, and it’s a relatively modern tool?

What are all of the alternative tools and products to Repo Prompt? Let’s be comprehensive and thorough, finding even newer/emerging startups and open source projects. Thank you! <3

Perplexity and MiniMax just gave us big lists with no real interpretation.

Gemini was, as always, consultant-y and educational. But it got way too intellectual about the prompt, highlighting philosophical approaches that startups and coding tools in general are taking to the ‘code context packing’ problem. Really not what we were looking for!

Claude looked through 529 sources and curated a tight list with very good summarizing descriptions alongside each tool.

ChatGPT’s DR provided a very thorough list, complete with tables highlighting features, differentiation, and a comparison matrix. There’s enough information along the way for a reader to select a few to try across spikey categories.

GPT-5.2 Pro created a smart bucketing of product categories and added one-liners to each, but lacked the usual commentary I appreciate the Pro model for providing.

To be fair to the “they just gave me a list” answers above… that is what I asked for.

🏆 Winner: ChatGPT Deep Research. It found the most literal answers while still providing thorough comparisons and relative descriptions. In other words, we can walk away feeling it was comprehensive and dense-but-still-actionable.

Winner, winner, chicken dinner

If you’re trying to figure out where to spend your subscription money or time, there’s a clear pair of winners depending on how literally you take the category: ChatGPT Deep Research or GPT-5.2 Pro.

But this experiment validated something more important for me: having multiple subscriptions. In my regular AI-using life, I send a majority of my queries to multiple LLMs, and I can’t imagine not getting the different flavors of answer that exist even across our samples above.

Because I appreciate and value Claude’s spunky writing and willingness to really address the main question, even if it’s in research mode, and I find it most willing to use its research to help me out with a ready-made conclusion.

And I appreciate Gemini’s thoroughness and educational style. It almost strips away your prompt and comes up with a “normalized” query that removes any opinion-having at all in favor of consultant/textbook-style rigor.

And it’s really useful to toss Kimi’s Agent Swarm mode at a problem that requires brute-force compute power and subagents to retrieve really specific data, with an orchestrating agent coordinating so that I can look away.

But there is a winner here and it shouldn’t surprise any power user: OpenAI’s models, as always, are supreme at using the web.

They are the most agentic, given the longest leash to scour for sources, and act with real agency along the way. I’ll cover this in more depth in a future piece, but if you look at the reasoning traces in the chat logs above, you’ll notice both GPT-5.2 DR and 5.2 Pro reckoning with the information they find — using it to dynamically decide what else they should know, what else might be important, and how to change or execute on their plans accordingly.

In other words, they use the web how I use the web.

If it’s part of your budget to subscribe to the Pro plan, you should always run both. You’ll appreciate 5.2 Pro for giving you an extra layer of framing and conversation that you’ll miss when using any of the pure DR products.

If you’re looking to know which generally-accessible Deep Research mode is best amongst the foundational chat applications, ChatGPT is your winner. 🏆

For now, OpenAI sits atop the DR pile. But updates to this kind of harness product can come fast and furious, so come on back soon — I’ll make this a ~monthly check-in for us to stay researched on as we go. :)